The Shortcut to integrating Private Cloud Compute into my app

Apple’s Private Cloud Compute (PCC) is pretty cool: it lets you use Apple’s cloud-hosted LLM with strong privacy guarantees, and that model is much more capable than Apple's on-device models. Best of all? So far, it seems to be completely free.

But if you’re a developer, you might have noticed something odd: there’s no public API for Private Cloud Compute. That's a shame, given that Apple's new Foundation Models API is fantastic and super well designed. There's no way to integrate PCC into your app, or even to use it from the command line. Or is there...?

The Shortcut (literally)

Here’s the twist: Private Cloud Compute is available to users via Shortcuts. That means that if you're a Mac app developer, with a little creativity you can call PCC from your own code by wrapping it in a Shortcut and invoking that Shortcut from your app. (I'm curious: is there a way to do this on iOS? Can apps call arbitrary Shortcuts?)

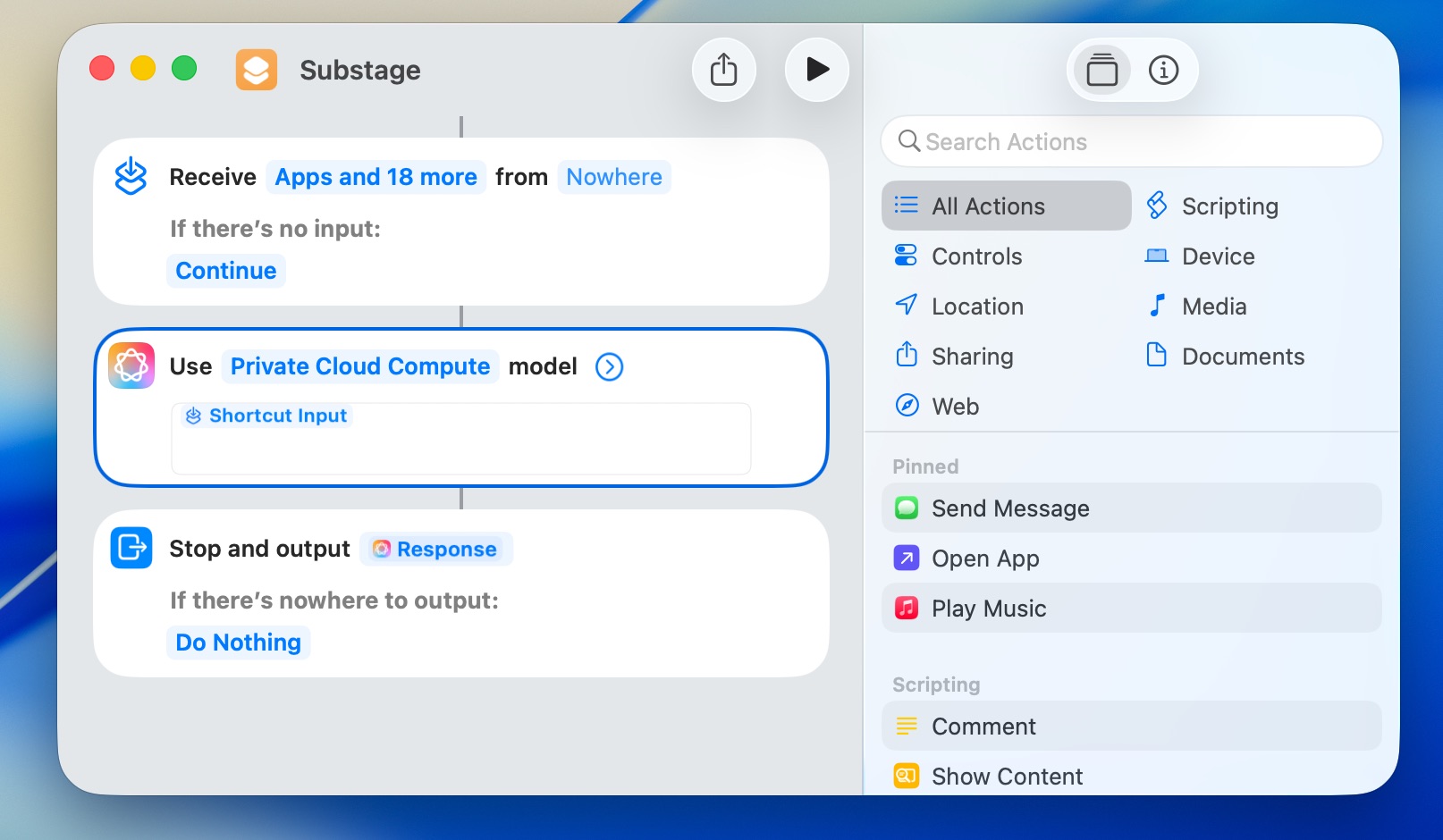

I’m not a Shortcuts power user by any means, but after a bit of tinkering, I managed to put together a super simple Shortcut that takes a prompt as input, runs it through Private Cloud Compute, and outputs the result. Here’s what it looks like:

The Shortcut itself is just a couple of actions: it takes the input, passes it to the “Use Model” action with the PCC model selected, and returns the result. That’s it!

Calling Shortcuts from Swift

Now, how do you call this Shortcut from your own app? It turns out macOS ships with a handy command-line tool, /usr/bin/shortcuts, which can run any Shortcut, pass it input, and capture its output.

So, in my Swift app, I simply used Process to run the shortcuts command-line tool and return its output, something like this:

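```swift
import Foundation

// Path where the Shortcut's output will be written (a temp file here)
let pccOutputPath = FileManager.default.temporaryDirectory
    .appendingPathComponent("pcc-output.txt").path

let process = Process()
process.executableURL = URL(fileURLWithPath: "/usr/bin/shortcuts")
process.arguments = [
    "run",
    "Substage-PCC",        // name of the Shortcut in the Shortcuts app
    "-o", pccOutputPath    // where `shortcuts` writes the Shortcut's result
]

try process.run()
process.waitUntilExit()

// Read back whatever the Shortcut returned
let result = try String(contentsOfFile: pccOutputPath, encoding: .utf8)
print(result)
```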
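The snippet above only captures the output; the Shortcut also needs the prompt. One way to pass it is to write the prompt to a temporary file, hand that file to shortcuts with its -i (--input-path) option, and check the exit status before reading the -o file. Here's a rough sketch under a few assumptions: the helper names askPCC, shortcutArguments and PCCError are hypothetical, and it assumes the Substage-PCC Shortcut accepts the file as its input and passes it to "Use Model" as text, which I haven't verified for every setup.

```swift
import Foundation

// Hypothetical error type for a failed `shortcuts run`
enum PCCError: Error {
    case shortcutFailed(status: Int32)
}

// Builds the `shortcuts run` argument list: -i passes the prompt file as
// the Shortcut's input, -o tells `shortcuts` where to write the result.
func shortcutArguments(name: String, inputPath: String, outputPath: String) -> [String] {
    return ["run", name, "-i", inputPath, "-o", outputPath]
}

// Hypothetical helper: sends `prompt` through the named Shortcut (assumed to
// feed its input to "Use Model" with PCC selected) and returns the output text.
func askPCC(_ prompt: String, shortcutName: String = "Substage-PCC") throws -> String {
    let tmp = FileManager.default.temporaryDirectory
    let id = UUID().uuidString
    let inputURL = tmp.appendingPathComponent("pcc-input-\(id).txt")
    let outputURL = tmp.appendingPathComponent("pcc-output-\(id).txt")
    // Remove both temp files however this function exits
    defer {
        try? FileManager.default.removeItem(at: inputURL)
        try? FileManager.default.removeItem(at: outputURL)
    }

    try prompt.write(to: inputURL, atomically: true, encoding: .utf8)

    let process = Process()
    process.executableURL = URL(fileURLWithPath: "/usr/bin/shortcuts")
    process.arguments = shortcutArguments(name: shortcutName,
                                          inputPath: inputURL.path,
                                          outputPath: outputURL.path)
    try process.run()
    process.waitUntilExit()   // blocks until the Shortcut finishes

    // A non-zero status means the Shortcut (or the tool) reported a failure
    guard process.terminationStatus == 0 else {
        throw PCCError.shortcutFailed(status: process.terminationStatus)
    }
    return try String(contentsOf: outputURL, encoding: .utf8)
}
```

Because waitUntilExit() blocks and PCC responses can take a while, you'd probably want to call something like `try askPCC("Summarize this README")` from a background task rather than the main thread. Keeping the argument list in its own function also makes it easy to check without actually running the tool.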

This approach lets me effectively use Private Cloud Compute as an AI model within Substage. I’ve been experimenting with it, and so far its performance feels comparable to an average 8-billion-parameter open-source model. It's fine for general tasks, but in my experience it doesn’t quite match the coding abilities of specialized models like Qwen Coder 7B. That said, I'm currently reusing the same prompt that I use for other models, and it might benefit from some tweaks to improve its accuracy. It’s also noticeably slower and less capable than something like GPT-4.1 Mini.

But there’s a big upside: for the user, it’s not just cheap, it’s completely free, with no tokens to buy or API keys to manage. (Presumably, though, Apple has put some fair-use restrictions in place somewhere?)

From a user’s perspective, though, the setup won't be entirely seamless. To get started, they’ll need to agree to add the Shortcut to the Shortcuts app (and, err, not mess with it?), and the first time it runs, macOS will likely prompt them to grant permission for automation. It’s a couple of extra steps, but once set up, it works reliably in the background without prompting again.
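Since the whole approach depends on the Shortcut being installed under the exact name the app expects, it might be worth checking for it before the first call. A minimal sketch, assuming `shortcuts list` prints one Shortcut name per line; the helper names isShortcutInstalled and installedShortcutsListing are hypothetical:

```swift
import Foundation

// Returns true if `name` appears as a whole line in `shortcuts list` output
// (assumed format: one Shortcut name per line).
func isShortcutInstalled(_ name: String, listOutput: String) -> Bool {
    return listOutput
        .split(whereSeparator: \.isNewline)
        .contains { String($0).trimmingCharacters(in: .whitespaces) == name }
}

// Runs `/usr/bin/shortcuts list` and captures its standard output as a string.
func installedShortcutsListing() throws -> String {
    let process = Process()
    process.executableURL = URL(fileURLWithPath: "/usr/bin/shortcuts")
    process.arguments = ["list"]
    let pipe = Pipe()
    process.standardOutput = pipe
    try process.run()
    // Read everything before waiting, so a long listing can't fill the pipe
    let data = pipe.fileHandleForReading.readDataToEndOfFile()
    process.waitUntilExit()
    return String(decoding: data, as: UTF8.self)
}
```

In an app, `isShortcutInstalled("Substage-PCC", listOutput: try installedShortcutsListing())` could decide whether to show setup instructions instead of sending a prompt. Splitting the parsing into its own function keeps it testable without running the real tool.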

Overall, while it’s not a drop-in replacement for the fastest or most capable cloud models, this method is a fun way to tap into Apple’s privacy-preserving AI for your own workflows... at least until Apple adds official API support. I think I'll hold off on further integration work until I can be sure the user experience can be streamlined, or at least until closer to September when macOS Tahoe is released, since Apple may add an official API by then.

If you try this approach, let me know how it works for you, or if you find any clever ways to improve the user experience!