Substage subscriptions include access to ALL of our AI models (OpenAI, Anthropic, Google and Mistral) with no setup required, or you can buy a permanent Bring your own AI license to use your own LLMs instead.

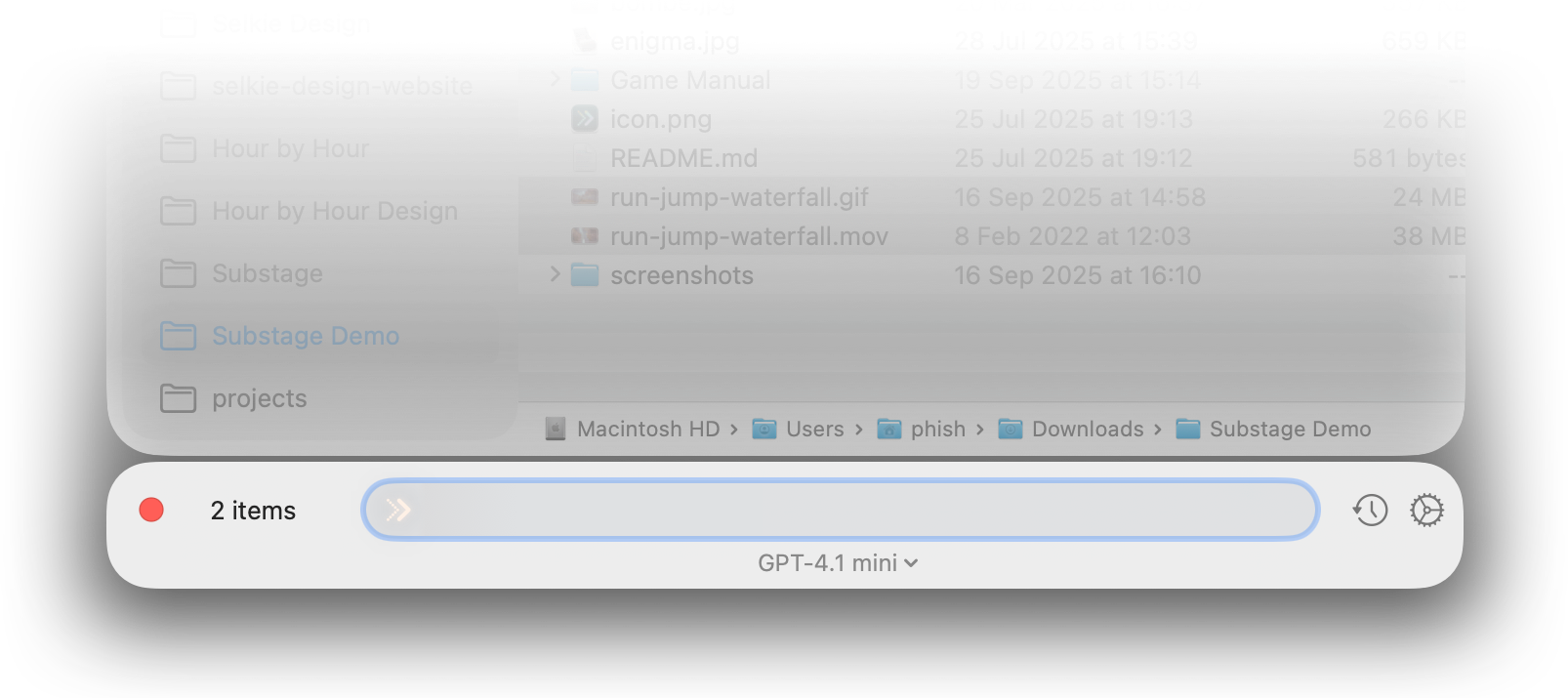

Substage knows what files and folders you have selected in Finder. Just describe what you want to do, and it figures out the rest.

Type what you want in plain English. Substage translates your request into the right Terminal commands, which are automatically analysed and which you can confirm before they run.

Every generated command is analysed before it runs by a sophisticated dry-run system that can simulate complex Terminal commands. Substage warns you about side effects such as file modification, deletion or system changes, so there are no surprises.
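To give a feel for the idea, here is a toy sketch of a side-effect check. It is not Substage's dry run system, which simulates commands rather than matching names; the `SIDE_EFFECTS` table and the `warn_about` helper are hypothetical and deliberately incomplete.

```python
import shlex

# Hypothetical lookup table: command name -> the kind of side effect it
# usually has. Illustrative only; this is not how Substage analyses commands.
SIDE_EFFECTS = {
    "rm": "deletion",
    "mv": "file modification",
    "cp": "file modification",
    "chmod": "file modification",
    "sudo": "system change",
}


def warn_about(command: str) -> list[str]:
    """Return the distinct side-effect labels found in a command line."""
    warnings = []
    for token in shlex.split(command):
        label = SIDE_EFFECTS.get(token)
        if label and label not in warnings:
            warnings.append(label)
    return warnings
```

A name-matching check like this would miss plenty of cases (and could flag a file that happens to be called `rm`), which is exactly why simulating the command is the more robust approach.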

Designed for quick commands, not drawn-out agentic workflows. Smart, targeted prompts and intelligent pre-analysis of your request help small models like GPT 4.1 Mini nail the right answer first time, every time.

Common tasks such as image and video conversion, zipping and unzipping run instantly without waiting for AI - just type "jpg" or "zip" and it's done in a split second.

We love fast, lightweight models like GPT 4.1 Mini for snappy command execution. But you can also connect to local models via Ollama or LM Studio, or configure a custom setup - whatever works best for you.

Press the up arrow on your keyboard to cycle through previous commands and reuse them on different files. No need to retype common tasks.

Summon Substage from anywhere with a customizable global shortcut. One keypress toggles the window docked beneath your Finder - always ready when you need it, out of the way when you don't.

Choose between a one-time purchase where you bring your own API key, or go all-inclusive with built-in access to top frontier AI models. See pricing options.

Substage is free to try for 2 weeks.

The Setapp version of Substage includes free usage of GPT 4.1 mini, which is a perfect fit for general usage: it's super snappy, and handles the majority of Substage uses perfectly. Or you can choose to use your own API keys and local models.

Looking for ideas? Here are some more example commands to try.

For quick conversions you can just type a format name (e.g., “mp4”, “wav”). Some advanced operations use optional extra tools that Substage can install automatically via the Homebrew package manager (with your confirmation).

Short prompts work: say just the target format (e.g., “jpg”, “png”). Some image operations can use optional tools like ImageMagick, installed via Homebrew on request.

Substage answers maths questions using the built‑in bc calculator on your Mac. We translate your prompt into an exact expression and evaluate it locally with high precision—so you get deterministic results without any AI “best guesses”.
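As a rough sketch of what "evaluate it locally with bc" can look like, the snippet below pipes an expression to the system `bc`. The helper names `bc_input` and `evaluate`, and the default of 20 decimal places, are assumptions for illustration; the source doesn't say how Substage actually calls bc.

```python
import subprocess


def bc_input(expression: str, scale: int = 20) -> str:
    """Build the text piped to bc: set the decimal places, then the expression."""
    return f"scale={scale}; {expression}\n"


def evaluate(expression: str, scale: int = 20) -> str:
    """Evaluate an expression with the system bc and return its printed result."""
    result = subprocess.run(
        ["bc", "-l"],               # -l loads bc's standard math library
        input=bc_input(expression, scale),
        capture_output=True,
        text=True,
        check=True,                 # raise if bc exits with an error
    )
    return result.stdout.strip()
```

On a Mac, `evaluate("(1250 * 1.2) / 3", scale=4)` would run entirely on your machine: the same expression always gives the same answer, with no model involved in the arithmetic.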

Create and inspect archives.

Quick text utilities and editing.

Sort and tidy folders in just a few words.

Convert between document formats. Substage can use Pandoc for best‑in‑class conversions, and can install it automatically via Homebrew (with your confirmation).
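For the curious, a basic Pandoc conversion boils down to `pandoc <input> -o <output>`, with the output format inferred from the file extension. The sketch below only builds that command; `pandoc_command` is a hypothetical helper, and the exact command Substage generates for a given prompt may differ.

```python
from pathlib import Path


def pandoc_command(source: str, target_ext: str) -> list[str]:
    """Build a pandoc invocation that converts `source` to `target_ext`.

    The output file sits next to the input and shares its base name.
    """
    src = Path(source)
    out = src.with_suffix("." + target_ext.lstrip("."))
    return ["pandoc", str(src), "-o", str(out)]
```

Passing the result to `subprocess.run(...)` would perform the conversion, provided Pandoc is installed.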

Advanced PDF tools use optional extra utilities such as QPDF, Ghostscript and Poppler that Substage can install automatically via Homebrew (with confirmation).

Inspect file types, sources and permissions.

Anything else I should add to this list? Email me or discuss on the Discord!

Substage helps you work with files and folders on your Mac by translating natural language into terminal commands. To do this, it sends your prompt - plus some context - to AI providers such as OpenAI, Anthropic, Mistral, and Google.

By default, Substage also includes the paths of selected files and folders. This allows the AI to make more relevant suggestions - for example, proposing the name "screenshots.zip" when you select files named "screenshot1.jpg" and "screenshot2.jpg." That said, we know even filenames can be sensitive, and we're exploring ways to reduce what gets shared - such as sending only file extensions instead.
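To make the "file extensions only" idea concrete, here is a minimal sketch of what such a reduction could look like. It is purely illustrative: `extensions_only` is a hypothetical helper, and this is an option being explored, not something Substage does today.

```python
from pathlib import PurePosixPath


def extensions_only(paths: list[str]) -> list[str]:
    """Reduce full paths to a sorted list of distinct, lowercase extensions.

    Items without an extension (such as most folders) are dropped.
    """
    suffixes = {PurePosixPath(p).suffix.lower() for p in paths}
    suffixes.discard("")
    return sorted(suffixes)
```

With this approach the AI would learn that you selected some `.jpg` files, but not what they are called or where they live. The trade-off is that name-based suggestions like "screenshots.zip" would no longer be possible.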

Substage never directly accesses or sends file contents. However, when summarising Terminal output, some content may be included. For instance, if you run a command that prints a file's contents and ask Substage to summarise the result, that content will be processed by the AI. Substage doesn't store any of this data itself.

Substage integrates with leading AI providers like OpenAI, Anthropic, Mistral, and Google. These services have similar privacy policies: they typically retain data for a limited period for purposes like abuse monitoring, but state that they don't use this data for model training.

For more privacy-conscious workflows, Substage also supports local AI models via tools like Ollama or LM Studio - though this requires additional setup and isn't the default experience.

We're always working to strike the right balance between usefulness and privacy, and we'd love to hear your thoughts or suggestions. You can:

Yes! Just add a custom model in the Substage settings. Use https://openrouter.ai/api as the base URL, and enter your OpenRouter API key. For the model, enter something like openai/gpt-4o-mini.
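If you want to sanity-check your key and model name outside Substage, the sketch below builds the kind of OpenAI-style chat request that OpenRouter accepts. The `/v1/chat/completions` path is OpenRouter's public chat endpoint; that Substage appends exactly this path to the base URL is an assumption, and `build_request` is a hypothetical helper.

```python
import json
import urllib.request

BASE_URL = "https://openrouter.ai/api"   # the base URL entered in Substage
CHAT_PATH = "/v1/chat/completions"       # assumption: OpenAI-style path


def build_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for OpenRouter."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + CHAT_PATH,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Calling `urllib.request.urlopen(build_request(key, "openai/gpt-4o-mini", "hello"))` with a real key should return a JSON response if the key and model name are valid.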

Yes! Just add a custom model in the Substage settings. Use https://api.perplexity.ai/chat/completions as the base URL, create a new API key at https://www.perplexity.ai/account/api/keys, use the copy button there, and paste it into Substage. Ensure you enter “sonar” as the model name, and anything you like as the friendly name, such as “Perplexity”.

After hiding the Substage bar, it can be shown again via the menu bar item at the top of the screen, or by using the global shortcut, which by default is CTRL-SPACE.

Still not working...?

Sorry about that! There aren’t many good options left for keyboard shortcuts that don’t already conflict with something else! You can reconfigure Substage’s global shortcut in its settings.

You could try:

In addition, Substage can’t yet see Smart Folders in Finder such as Recents, or Search views, though I’m hoping to add support in future.

You can manage your Substage subscription by clicking the View Subscription button in your original purchase email. If you have any trouble, please contact me and I'll be happy to help!

If you see a message saying you've reached your activation limit, you can manage your activations yourself:

If you have any trouble, just contact me and I'll be happy to help!

No, Substage currently does not integrate with Finder replacement apps. It relies on the standard macOS Finder for file selection and operations. Compatibility with third-party Finder alternatives may be considered in the future, but is not supported at this time.

Substage uses AI to translate your natural language prompts into Terminal commands, and sometimes it might not get things quite right. If something didn't work as expected, please check out the Limitations section below for more details on what Substage can and can't do.

I'm always interested to hear what works well and what doesn't! If you ran into a problem, please let me know. I can often give the AI better hints or improve how Substage handles certain requests. Your feedback helps make Substage smarter for everyone. Feel free to discuss on Discord!

Newsflash! AI can make mistakes. 😱

For most commands, we highly recommend small, fast models such as GPT 4.1 mini. Substage is intended for quick individual operations, such as file conversion, and with small AI models this can be a quick and snappy experience.

As soon as you increase the complexity of your request, things can get more unreliable. We recommend that more complex requests are only done by developers and tech-savvy users who understand the Terminal commands that are generated.

In order to understand Substage's further limitations, it's worth reviewing how it works under the hood:

Given that it's primarily a one step process, it's important to understand that the following will not work:

In addition, more complex operations, such as describing multiple steps in a single prompt, aren't recommended. It's best to describe each step separately and run them one at a time. For example:

We don't currently have integration with AI providers beyond the described Terminal command conversion process. So, for example, you can't ask Substage to:

And finally, a quick fire round of things that won't work in Substage:
